Use the tabs below to find information on community projects. Each project page links to the 'technology' and 'design flow' stages it uses and explains how you can comment on or join the project.

- Projects are key to community hardware design


As a community hardware design activity, we form our collaborations around shared design actions, which makes projects central to the SoC Labs community. Projects let us share and reuse hardware and software developed around core Arm IP in pursuit of our research goals.

- A project takes technology and uses a design flow to make a SoC.


A project has a timeframe and draws on the two other significant aspects of SoC development: the selection of technology, or IP blocks, that make up the SoC, and the design flow followed from specification through to the final instantiation of the system. Most systems on chip use pre-existing IP blocks. A project team can select IP from the technology section of the site during the first stage of a design flow, Architectural Design. Later stages of the design flow support the creation of the novel aspects of the SoC design.

- Projects have a type: active, complete (case study), or being formulated (request for collaboration)


We encourage sharing information about projects much earlier than in traditional academic collaboration, where knowledge has historically been shared only at the end of the research activity through published papers and results. As well as write-ups of finished projects ("case study") and ongoing projects under development ("projects"), we list projects that are still being formulated ("request for collaboration"). We want to encourage people to engage with project teams, for example by adding a comment to a specific project page or by joining a project.

Latest Reference Design Projects

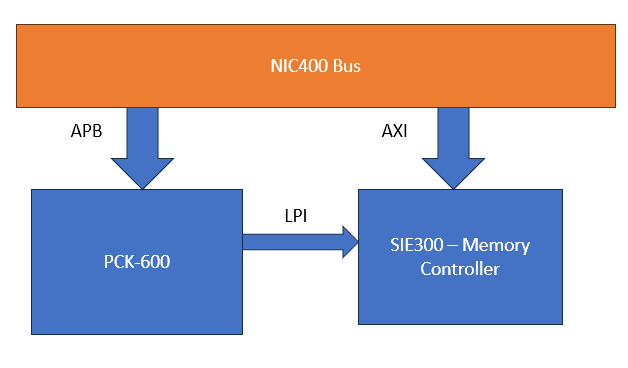

The Arm PCK600 IP provides components for distributing a power control infrastructure across a SoC to make the design energy efficient. Arm provides the IP as part of its Power Control System Architecture, which can be used to control the power states of different parts of the system. The power infrastructure is controlled through the Power Policy Unit (PPU), which has an APB interface for software control and low-power interfaces that connect to the power-controllable IP within the system.

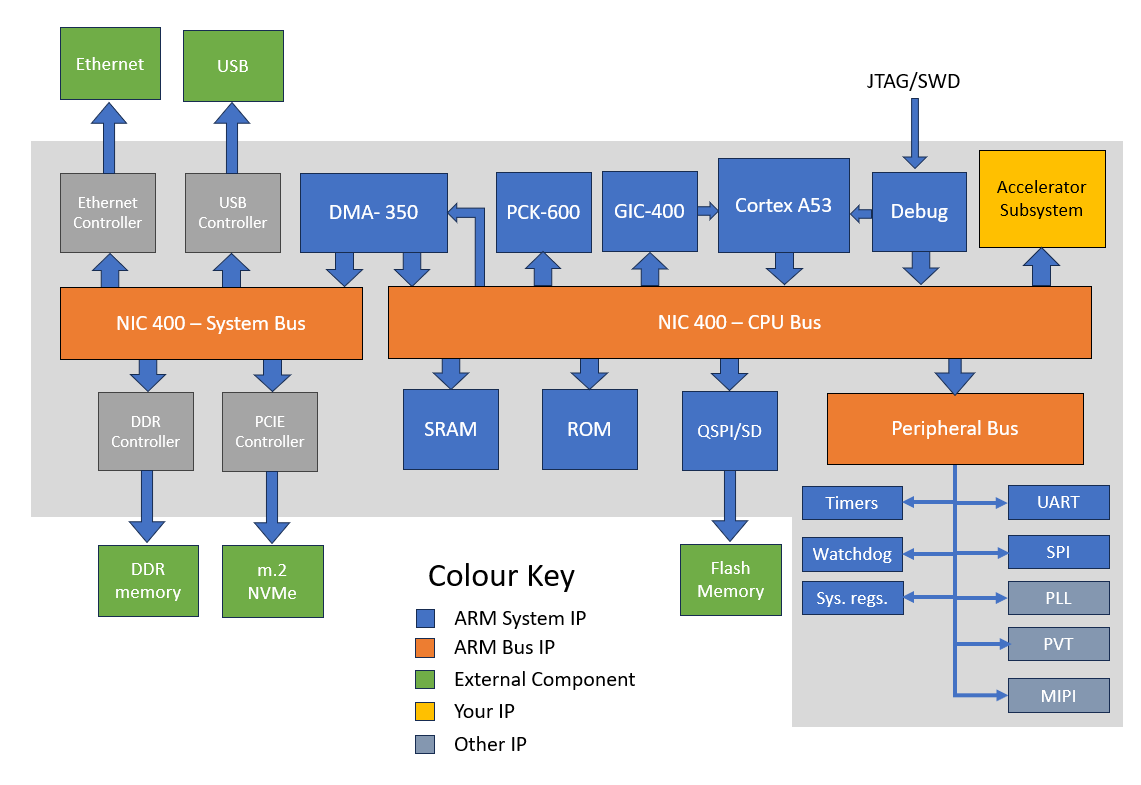

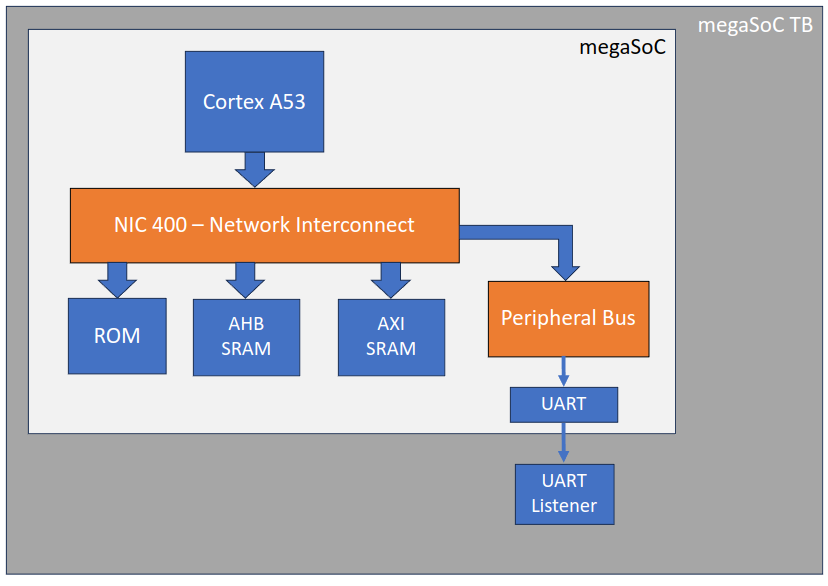

megasoc is designed to provide a complex SoC component that can 'host' and support the development and evaluation of research components or subsystems. The design allows a seamless transition from FPGA to physical silicon implementation via a pre-verified programmable control system, which lets software and diagnostic functionality be reused for the configuration, control and diagnostic analysis of research hardware such as custom accelerators or signal processing blocks.

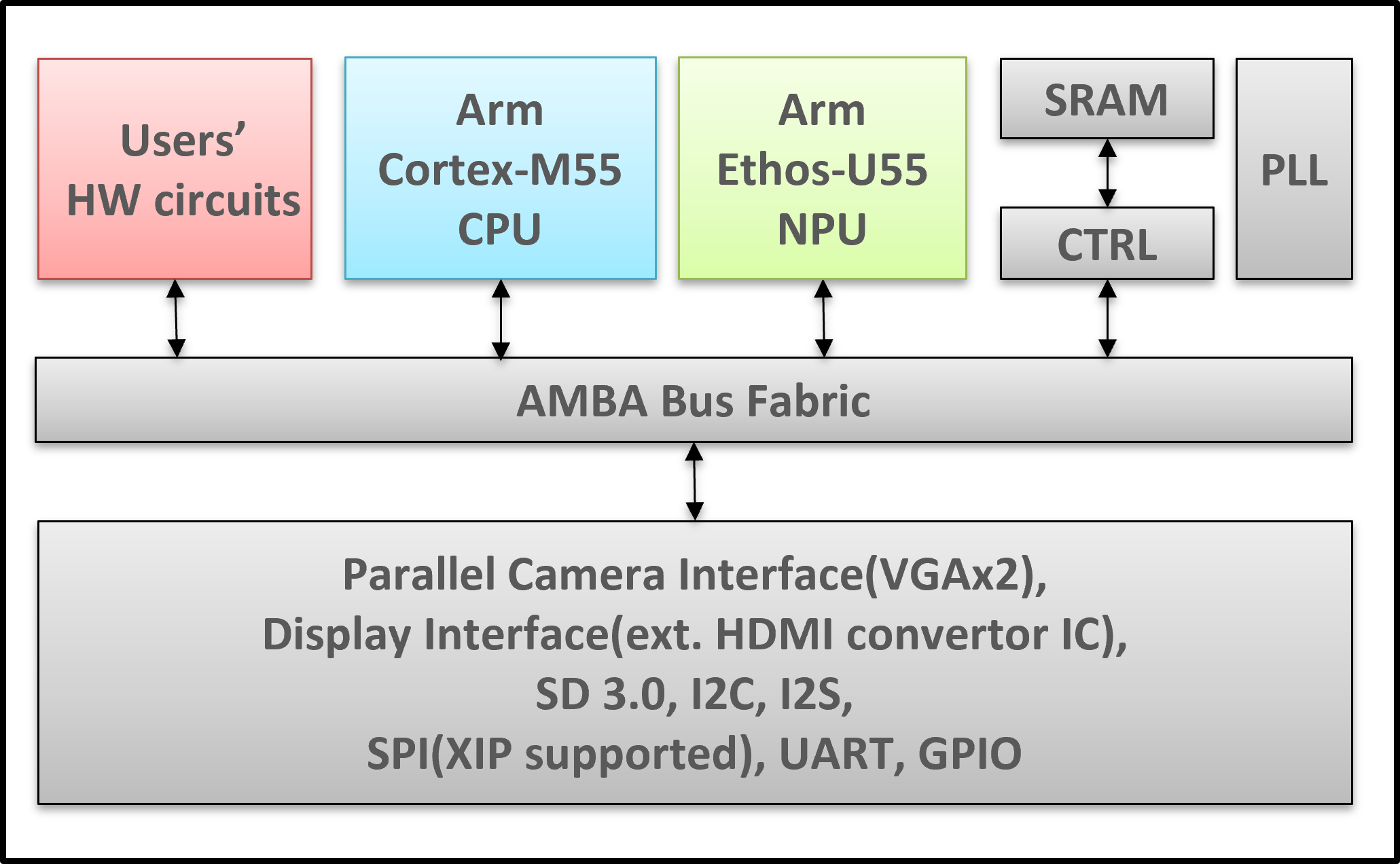

The Arm Cortex-M55 AIoT SoC design platform is an AIoT subsystem that lets custom SoC designers integrate their own hardware circuits and embedded software to differentiate their designs. The platform is developed by TSRI (Taiwan Semiconductor Research Institute) to support academic research on SoC design. It is built on the Arm Corstone-300 reference package, which features the Cortex-M55 CPU and the Ethos-U55 NPU.

Latest Collaborative Projects

This program is dedicated to the development of a System on Chip (SoC) platform, specifically designed to support learning and research activities within Indonesian academic institutions. The platform serves as an educational and research tool for students, lecturers, and researchers to gain hands-on experience in digital chip design.

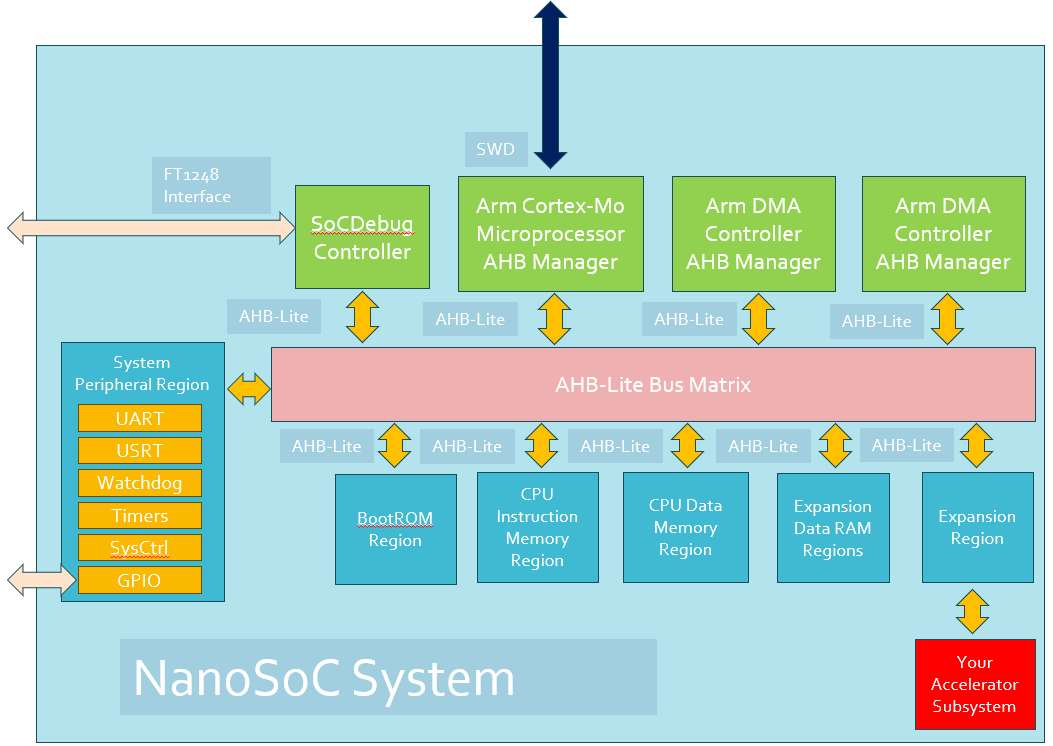

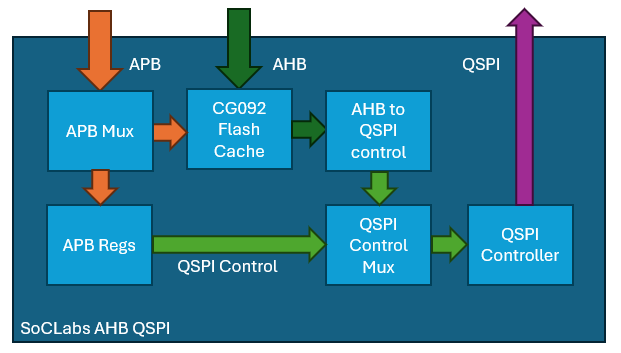

The instruction memory in the first tape-out of nanosoc was implemented using SRAM. The benefit was very high read bandwidth; the downside was that all the code was lost on a power-on reset, because SRAM is volatile memory. Using non-volatile memory instead would benefit applications where the deployment of the ASIC does not allow the SRAM to be programmed after every power-up, or where there is simply no time to do so.

There is growing interest within the SoC Labs community in an Arm A-Class SoC that can support a full operating system, undertake more complex compute tasks and enable more complex software-directed research. The Cortex-A53 is Arm's most widely deployed 64-bit Armv8-A processor and can provide these capabilities power-efficiently.

This project aims to design and implement a high capacity memory subsystem for Arm A series processor based SoC designs. The current focus of the project is the design and implementation of a Memory Controller for DDR4 memory.

Latest Competition Projects

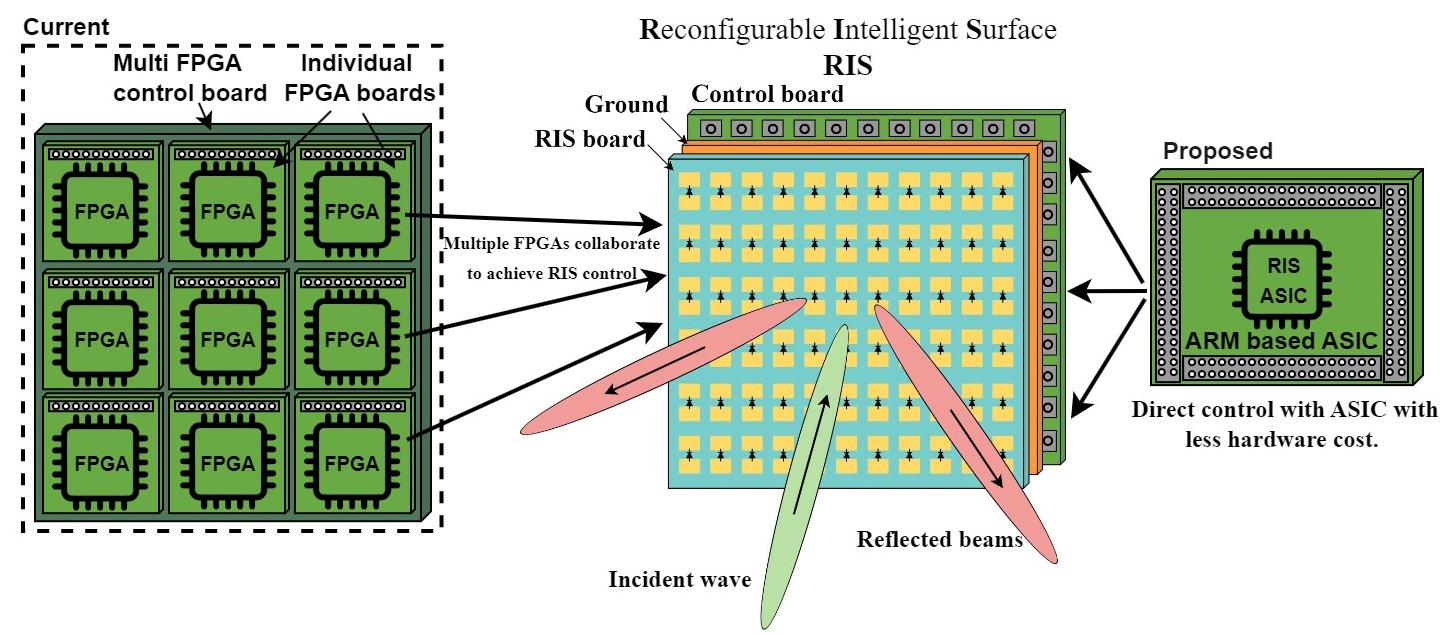

Reconfigurable Intelligent Surfaces (RIS) are planar structures composed of large arrays of tunable elements that can dynamically redirect, reflect, or shape wireless signals in the environment.

This project aims to develop an advanced RF energy harvesting (EH) receiver chip specifically designed to power embedded sensors for monitoring the condition of groceries during transportation. The receiver chip captures wireless energy transmitted from phased array antennas and converts it into electrical power that is used to operate onboard sensors, which continuously monitor critical parameters such as temperature and humidity inside delivery trucks.

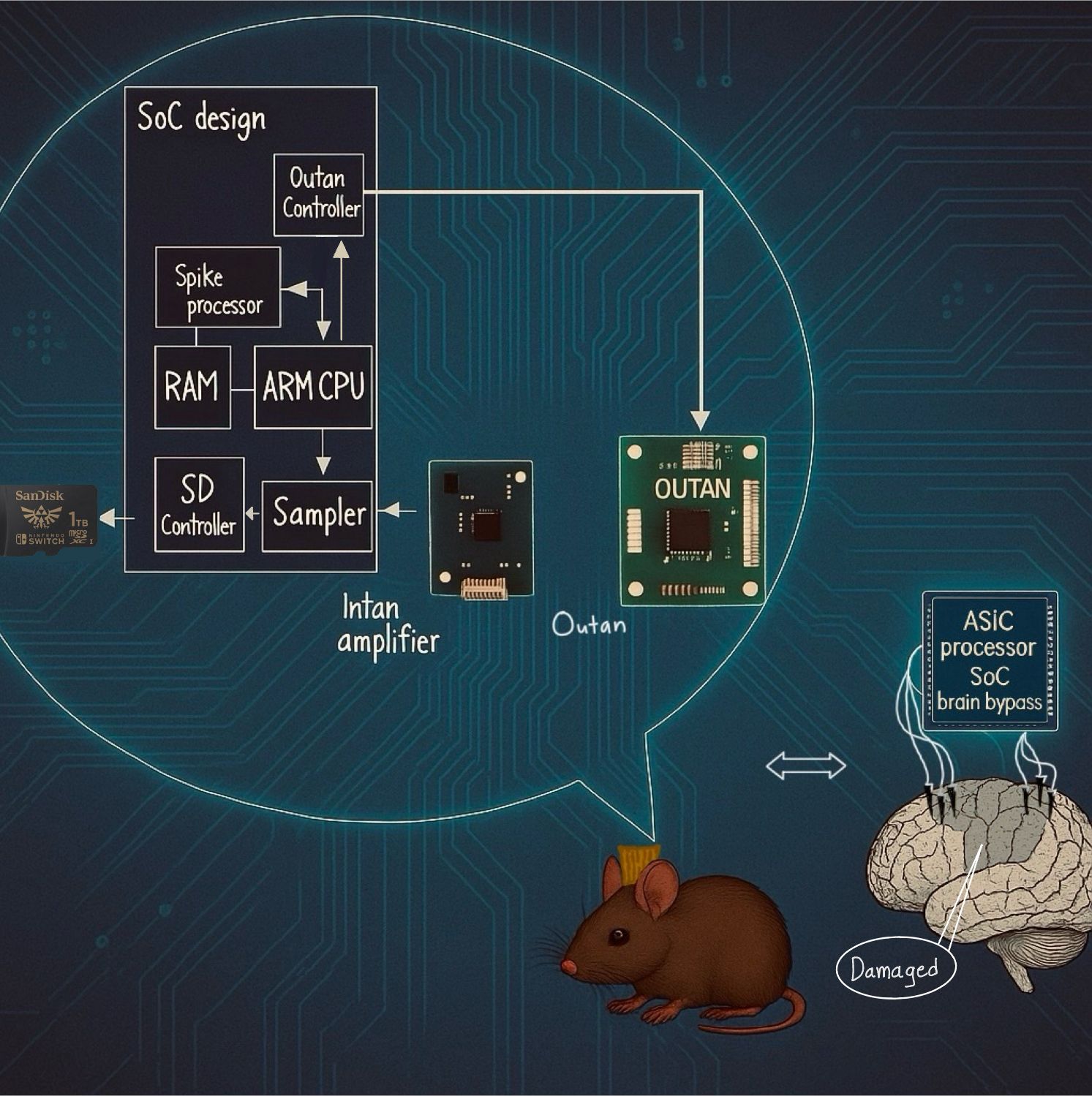

Stroke and epilepsy are among the most common debilitating neurological conditions, with a worldwide prevalence of 100 million people (World Stroke Organization, 2022) and 50 million people (World Health Organization, 2024), respectively. Present-day approaches for treating neurological and neurosurgical conditions include physiotherapy, pharmacological treatment, surgical excision, and interventions such as deep brain stimulation.

In the context of Industry 4.0, handwritten digit recognition plays a vital role in numerous applications such as smart banking systems and postal code detection. One of the most effective approaches to tackle this problem is through the use of machine learning and neural network models, which have demonstrated impressive accuracy and adaptability in visual pattern recognition tasks.

Latest Completed Project Milestones

| Project | Name | Target Date | Completed Date | Description |
|---|---|---|---|---|
| Geographical support for Canada | Workshop: Simplifying System-on-Chip (SoC) design and fabrication with Cadence workflow and ARM Reference Architecture | | | The workshop will cover the complete SoC development lifecycle, looking not only at how to undertake an Arm-based SoC design but also at how to simplify all the tasks associated with it. This includes how to maintain the EDA tool environment, how to use foundry PDKs for physical design leading to silicon validation of a design, and how to work through all the steps successfully. The workshop will use the SoC Labs freely available nanoSoC reference design, an Arm-based microcontroller SoC, and demonstrate how silicon-proven Cadence workflows and a GlobalFoundries technology node can be used to fabricate a design. nanoSoC has been taped out successfully at minimal cost by individual Master's and PhD students. The workshop will also share some details of more ambitious SoC reference designs and highlight custom accelerator integration examples for AI/ML workloads that can be used in larger research projects. |
| Sensing for Precision Agriculture | Technology Selection | | | Aim: select and obtain a PDK. Fallback: SKY130 open source PDK with Cadence MPW. |
| megasoc re-usable SoC platform | Getting Started | | | |
| megasoc re-usable SoC platform | Technology Selection | | | |
| megasoc re-usable SoC platform | Specifying a SoC | | | |
| megasoc re-usable SoC platform | Architectural Design | | | Overall architecture of megaSoC |
| megasoc re-usable SoC platform | IP Selection | | | |
| Geographical support for Canada | Webinar: Make Academic System on Chip Projects Easy via Collaboration and Reusable Design | | | How the broad range of IP freely available through Arm Academic Access, combined with academic-focused reusable reference designs and tape-out flows, is making System on Chip projects easier for individual students and larger academic teams to undertake. Examples of projects being developed by the SoC Labs academic community. A specific call for participation from Canada: SoC Labs and Arm AAA will support you in developing your System on Chip projects, especially if you are an academic new to SoC and ASIC design. |
| Sensing for Precision Agriculture | Gain Familiarity with Cadence Workflow | | | Using the full Cadence design tools workflow for synthesis, implementation, etc. to build up team knowledge of the associated processes, in preparation for using this workflow on our RTL. |

Daniel Newbrook

David Flynn

Trio Adiono

Srimanth Tenneti

Zhicheng Shen

Fidel Makatia

The Anh Nguyen